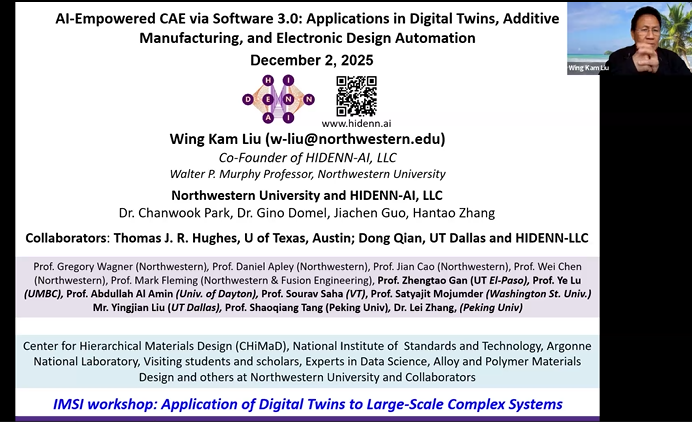

AI-empowered CAE via software 3.0: applications in digital twins, additive manufacturing, and electronic design automation

Presenter

December 2, 2025

Event: 59629

Abstract

1. Introduction

Software development is undergoing a paradigm shift from explicit programming (“Software 1.0”) toward software learned from data (“Software 2.0”) [1]. This evolution has now entered the era of “Software 3.0,” in which natural language serves as the programming interface and large pretrained models handle the rest [2]. In this new paradigm, large language models (LLMs) function as versatile, general-purpose reasoning engines, allowing developers to state goals in plain English and have the model generate the corresponding solutions [3]. The impact of this shift extends far beyond computer science: foundation models are already driving advances in biomedical research and healthcare [4], education [5, 6], and high-stakes fields such as finance, consulting, and law [7].

Drawing an analogy to computer-aided engineering (CAE) software, Software 1.0 corresponds to classical finite element analysis (FEA) solvers, such as Ansys and Abaqus, in which engineers hand-coded the numerical formulations [8]. Software 2.0 introduced data-driven surrogate modelling, in which machine learning is used to approximate high-fidelity simulations [9]. Software 3.0 aims to combine the strengths of both: it embeds engineering domain knowledge into LLMs that can understand context, retrieve relevant information from a body of domain expertise, and generate solutions or insights on demand. Recent uses of LLMs in CAE have automated tasks such as CAD model generation and finite element simulation [10], but these applications largely replicate tasks that human experts can already perform. In this talk, we show how the Software 3.0 framework can bring transformative advances to CAE, highlighting its applications to digital twins, additive manufacturing, and electronic design automation [11].

2. Software 3.0 in CAE

2.1. Agentic AI via the Model Context Protocol (MCP) for digital twins

Agentic AI refers to AI systems that go beyond passively assisting engineers and instead act as autonomous collaborators capable of planning, executing, and adapting CAE workflows. Enabled by the Model Context Protocol (MCP), which standardizes communication between LLMs and software tools, such systems can dynamically invoke functions for geometry import, meshing, solver execution, data processing, and surrogate modelling. This workflow supports the creation and operation of digital twins by automating decision-making steps, such as selecting sampling strategies or training models, that are typically problem-dependent and settled through trial and error. As MCP tools accumulate into a scalable knowledge base, annotated by engineers and interpretable by LLMs, agentic AI can leverage this collective expertise to accelerate the exploration of parameter spaces, improve computational efficiency, and deliver adaptive digital twin simulations.
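To make the tool-invocation loop more concrete, the Python sketch below imitates the message shape MCP uses for tool discovery and invocation (JSON-RPC 2.0 requests with the methods `tools/list` and `tools/call`). It is a stdlib-only toy under stated assumptions, not the official MCP SDK: the in-process registry, the `tool` decorator, the `handle` and `call` helpers, and the two tools `mesh_bar` and `solve_bar` are all hypothetical, and a fixed script stands in for the LLM that would normally decide which tool to call next. The tool descriptions play the role of the engineer-written annotations mentioned above.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical in-process tool registry. It mimics the shape of MCP's
# JSON-RPC 2.0 "tools/list" and "tools/call" messages, not the MCP SDK itself.
TOOLS: Dict[str, Dict[str, Any]] = {}


def tool(description: str) -> Callable:
    """Register a function as a tool, with an engineer-written description."""
    def register(fn: Callable) -> Callable:
        TOOLS[fn.__name__] = {"description": description, "fn": fn}
        return fn
    return register


@tool("Uniform 1D mesh of a bar: node coordinates on [0, length].")
def mesh_bar(length: float, n_elements: int) -> List[float]:
    h = length / n_elements
    return [i * h for i in range(n_elements + 1)]


@tool("Axial displacement of a bar fixed at x=0 under a tip load.")
def solve_bar(nodes: List[float], force: float, E: float, A: float) -> List[float]:
    # Toy "solver": closed form u(x) = F x / (E A), which linear finite
    # elements reproduce exactly at the nodes for this load case.
    return [force * x / (E * A) for x in nodes]


def handle(request: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch a JSON-RPC 2.0 style request against the registry."""
    method = request["method"]
    if method == "tools/list":
        result = {"tools": [{"name": name, "description": t["description"]}
                            for name, t in TOOLS.items()]}
    elif method == "tools/call":
        params = request["params"]
        value = TOOLS[params["name"]]["fn"](**params["arguments"])
        result = {"content": [{"type": "text", "text": json.dumps(value)}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def call(name: str, arguments: Dict[str, Any], req_id: int) -> Any:
    """Client-side helper: send tools/call and decode the JSON text payload."""
    response = handle({"jsonrpc": "2.0", "id": req_id, "method": "tools/call",
                       "params": {"name": name, "arguments": arguments}})
    return json.loads(response["result"]["content"][0]["text"])


# A fixed script stands in for the agent's plan: discover, mesh, then solve.
catalog = handle({"jsonrpc": "2.0", "id": 0, "method": "tools/list"})
nodes = call("mesh_bar", {"length": 2.0, "n_elements": 4}, req_id=1)
u = call("solve_bar", {"nodes": nodes, "force": 1000.0, "E": 200e9, "A": 1e-4},
         req_id=2)
print(nodes)  # [0.0, 0.5, 1.0, 1.5, 2.0]
```

In an actual agentic setup, the descriptions returned by `tools/list` are what the LLM reads when choosing a tool for the current step, which is why careful engineer annotations make the accumulated tool base more useful.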

2.2. Automated solver development for intrusive model order reduction (MOR) for space-parameter-time forward and inverse engineering problems

A transformative opportunity for CAE lies in automated solver development for intrusive model order reduction (MOR), which reduces the computational burden of the large-scale simulations prevalent in additive manufacturing and electronic design automation while preserving accuracy. Unlike non-intrusive, data-driven surrogates, intrusive MOR works directly with the governing equations to construct efficient, data-free reduced-order models, but its adoption has been limited by the difficulty of redeveloping complex solvers. Recent advances in LLMs offer a way to overcome this barrier by automating algebraic derivations, code restructuring, and solver implementation, enabling engineers to generate high-fidelity reduced-order models from natural language prompts. We demonstrate this capability with Tensor-decomposition-based A Priori Surrogates (TAPS) [12], showing how LLMs can reduce development effort while meeting key performance targets for accuracy, speed, and efficiency. This workflow holds promise for accelerating digital twin applications in additive manufacturing and electronic design automation, where scalable, adaptive reduced-order models are critical for design and optimization.
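As a rough illustration of what an intrusive, data-free reduced-order solver looks like, the Python/NumPy sketch below solves the 2D Poisson problem -Δu = 1 on the unit square with zero boundary values. It builds a separated representation U ≈ Σ X_m Y_mᵀ directly from the discretized equations, with no training data. This is a greedy, proper-generalized-decomposition-style algorithm swapped in for illustration, not the TAPS formulation of [12], which treats space, parameter, and time dimensions; the function names `laplacian_1d` and `separated_solve`, the grid size, and the mode and iteration counts are assumptions chosen for the example.

```python
import numpy as np


def laplacian_1d(n: int) -> np.ndarray:
    """Second-difference matrix for -d^2/dx^2 on n interior nodes of [0, 1]."""
    h = 1.0 / (n + 1)
    return (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2


def separated_solve(K: np.ndarray, F: np.ndarray, n_modes: int = 15,
                    n_iter: int = 25, tol: float = 1e-10) -> np.ndarray:
    """Greedy rank-one solve of K U + U K = F with U ~ sum_m outer(X_m, Y_m).

    K U + U K is the 2D finite-difference Laplacian acting on the nodal
    matrix U (rows = x nodes, columns = y nodes). Each new mode is found by
    alternating Galerkin projections, so only n-by-n systems are solved.
    """
    n = K.shape[0]
    eye_n = np.eye(n)
    U = np.zeros_like(F)
    for _ in range(n_modes):
        R = F - K @ U - U @ K              # residual of the current expansion
        if np.linalg.norm(R) < tol * np.linalg.norm(F):
            break
        Y = np.ones(n)                     # initial guess for the y-factor
        for _ in range(n_iter):
            # Fix Y and project onto it: ((Y.Y) K + (Y.K Y) I) X = R Y
            X = np.linalg.solve((Y @ Y) * K + (Y @ K @ Y) * eye_n, R @ Y)
            # Fix X and project onto it: ((X.X) K + (X.K X) I) Y = R^T X
            Y = np.linalg.solve((X @ X) * K + (X @ K @ X) * eye_n, R.T @ X)
        U += np.outer(X, Y)
    return U


n = 20
K = laplacian_1d(n)
F = np.ones((n, n))                        # uniform source f = 1
U_sep = separated_solve(K, F)

# Full-order reference: assemble the n^2-by-n^2 operator kron(K, I) + kron(I, K).
A = np.kron(K, np.eye(n)) + np.kron(np.eye(n), K)
U_full = np.linalg.solve(A, F.ravel()).reshape(n, n)
rel_err = np.linalg.norm(U_sep - U_full) / np.linalg.norm(U_full)
print(f"relative error vs full-order solve: {rel_err:.2e}")
```

The design point is that each mode only requires n-by-n solves, whereas the full-order reference solves an n²-by-n² system; in higher dimensions this gap is what makes a priori tensor-decomposition solvers attractive. It also shows why such solvers are hard to build: the projected operators in the comments must be rederived for every new governing equation, which is the kind of algebraic derivation and solver implementation this work proposes to automate with LLMs.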

Wing Kam Liu*, Chanwook Park+, Jiachen Guo+, Gino Domel*, Hantao Zhang+

*Northwestern University, HIDENN-AI, LLC

+Northwestern University

References

[1] Karpathy, A., Software 2.0. 2017.

[2] Karpathy, A., Software 3.0. 2025.

[3] Raiaan, M.A.K., et al., A review on large language models: Architectures, applications, taxonomies, open issues and challenges. IEEE Access, 2024. 12: p. 26839-26874.

[4] Nazi, Z.A. and W. Peng, Large language models in healthcare and medical domain: A review. Informatics, 2024. MDPI.

[5] Kasneci, E., et al., ChatGPT for good? On opportunities and challenges of large language models for education. Learning and Individual Differences, 2023. 103: p. 102274.

[6] Jeon, J. and S. Lee, Large language models in education: A focus on the complementary relationship between human teachers and ChatGPT. Education and Information Technologies, 2023. 28(12): p. 15873-15892.

[7] Chen, Z.Z., et al., A survey on large language models for critical societal domains: Finance, healthcare, and law. arXiv preprint arXiv:2405.01769, 2024.

[8] Liu, W.K., S. Li, and H.S. Park, Eighty years of the finite element method: Birth, evolution, and future. Archives of Computational Methods in Engineering, 2022. 29(6): p. 4431-4453.

[9] Guo, J., et al., Interpolating Neural Network-Tensor Decomposition (INN-TD): a scalable and interpretable approach for large-scale physics-based problems. arXiv preprint arXiv:2503.02041, 2025.

[10] Zhang, L., et al., Large language models for computer-aided design: A survey. arXiv preprint arXiv:2505.08137, 2025.

[11] Guo, J., et al., AI-Empowered CAE via Software 3.0. In preparation, 2025.

[12] Guo, J., et al., Tensor-decomposition-based A Priori Surrogate (TAPS) modeling for ultra large-scale simulations. Computer Methods in Applied Mechanics and Engineering, 2025. 444: p. 118101.